2024 in Synthetic Data and Smol Models [LS Live @ NeurIPS]

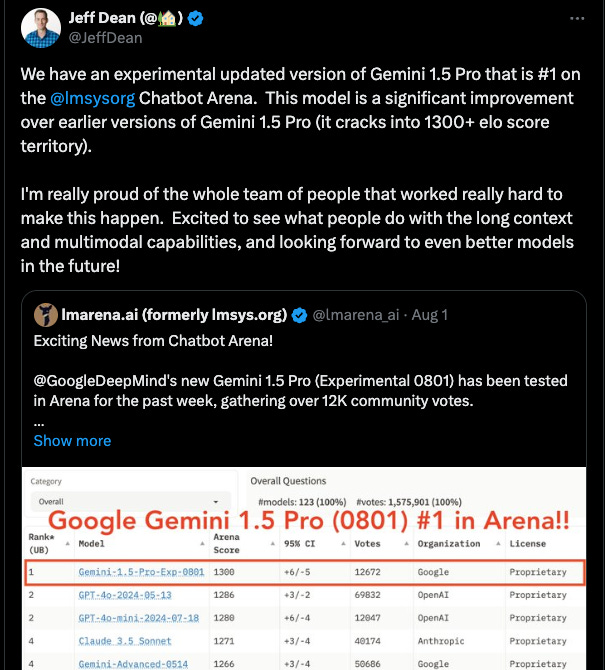

Description

Happy holidays! We’ll be sharing snippets from Latent Space LIVE! through the break, bringing you the best of 2024! We want to express our deepest appreciation to event sponsors AWS, Daylight Computer, Thoth.ai, StrongCompute, Notable Capital, and most of all, all our LS supporters who helped fund the gorgeous venue and A/V production!

For NeurIPS last year we did our standard conference podcast coverage, interviewing the authors of selected papers (which we have now also done for ICLR and ICML). However, we felt that we could be doing more to help AI Engineers 1) get more industry-relevant content, and 2) recap the 2024 year in review from experts. As a result, we organized the first Latent Space LIVE!, our first in-person miniconference, at NeurIPS 2024 in Vancouver.

Today, we’re proud to share Loubna’s highly anticipated talk (slides here)!

Synthetic Data

We called out the Synthetic Data debate at last year’s NeurIPS, and it is no surprise that 2024 was dominated by the rise of synthetic data everywhere:

* Apple’s Rephrasing the Web, Microsoft’s Phi 2-4 and Orca/AgentInstruct, Tencent’s Billion Persona dataset, DCLM, HuggingFace’s FineWeb-Edu, and Loubna’s own Cosmopedia extended the ideas of synthetic textbook and agent generation to improve raw web scrape dataset quality.

* We also talked to the IDEFICS/OBELICS team at HuggingFace, who released WebSight this year, the first work on code-vs-images synthetic data.

* We called Llama 3.1 the Synthetic Data Model for its extensive use (and documentation!) of synthetic data in its pipeline, as well as its permissive license.

* Nemotron CC and Nemotron-4-340B also made a big splash this year for how they used 20k items of human data to synthesize over 98% of the data used for SFT/PFT.

* Cohere introduced Multilingual Arbitrage: Optimizing Data Pools to Accelerate Multilingual Progress, observing improvements of up to 56.5% in win rates when using multiple teachers versus the single best teacher model.

* In post training, AI2’s Tülu3 (discussed by Luca in our Open Models talk) and Loubna’s Smol Talk were also notable open releases this year.

This comes in the face of a lot of scrutiny and criticism, with Scale AI as one of the leading voices, and with the publication of "AI models collapse when trained on recursively generated data" in Nature bringing mainstream attention to the potential downsides of poor-quality synthetic data.

Some of the concerns we highlighted last year about low-background tokens are coming to bear: ChatGPT-contaminated data is spiking in every possible metric.

But perhaps, if Sakana’s AI Scientist pans out this year, we will have mostly-AI AI researchers publishing AI research anyway, so do we really care as long as the ideas can be verified to be correct?

Smol Models

Meta surprised many folks this year by not just aggressively updating Llama 3 and adding multimodality, but also adding a new series of “small” 1B and 3B “on device” models, even working on quantized numerics collaborations with Qualcomm, Mediatek, and Arm. It is nearly unbelievable that a 1B model today can qualitatively match a 13B model of last year, and the minimum size to hit a given MMLU bar has come down roughly 10x in the last year. We have been tracking this, proxied by Lmsys Elo and inference price.

The key reads this year are:

* MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases

* Apple Intelligence Foundation Language Models

* Hymba: A Hybrid-head Architecture for Small Language Models

* Loubna’s SmolLM and SmolLM2: a family of state-of-the-art small models with 135M, 360M, and 1.7B parameters on the Pareto efficiency frontier.

* and Moondream, which we already covered in the 2024 in Vision talk

Full Talk on YouTube

Timestamps

* [00:00:05] Loubna Intro

* [00:00:33] The Rise of Synthetic Data Everywhere

* [00:02:57] Model Collapse

* [00:05:14] Phi, FineWeb, Cosmopedia - Synthetic Textbooks

* [00:12:36] DCLM, Nemotron-CC

* [00:13:28] Post Training - AI2 Tulu, Smol Talk, Cohere Multilingual Arbitrage

* [00:16:17] Smol Models

* [00:18:24] On Device Models

* [00:22:45] Smol Vision Models

* [00:25:14] What's Next

Transcript

2024 in Synthetic Data and Smol Models

[00:00:05] Loubna Intro

[00:00:05] Speaker: I'm very happy to be here. Thank you for the invitation. So I'm going to be talking about synthetic data in 2024. And then I'm going to be talking about small on device models. So I think the most interesting thing about synthetic data this year is that like now we have it everywhere in the large language models pipeline.

[00:00:33] The Rise of Synthetic Data Everywhere

[00:00:33] Speaker: I think initially, synthetic data was mainly used just for post training, because naturally that's the part where we needed human annotators. And then after that, we realized that we don't really have good benchmarks to [00:01:00] measure if models follow instructions well, if they are creative enough, or if they are chatty enough, so we also started using LLMs as judges.

[00:01:08] Speaker: And I think this year and towards the end of last year, we also went to the pre training part and we started generating synthetic data for pre training to kind of replace some parts of the web. And the motivation behind that is that you have a lot of control over synthetic data. You can control your prompt and basically also the kind of data that you generate.
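Editor's sketch: one way to picture "controlling your prompt" is to build generation prompts from a grid of seeds. The seed lists, the template, and the `build_prompts` helper below are all hypothetical and are not taken from the talk or from any specific pipeline; no model is called, the code only constructs the prompts that would be sent to an LLM.

```python
from itertools import product

# Hypothetical seed lists; a real pipeline would likely draw these from
# curated topic lists or samples of web pages.
TOPICS = ["photosynthesis", "binary search"]
AUDIENCES = ["middle school students", "college students"]
FORMATS = ["textbook section", "blog post"]

# Hypothetical template: each slot is a knob that controls the generated data.
TEMPLATE = (
    "Write a {fmt} about {topic} for {audience}. "
    "Be accurate, self-contained, and include one worked example."
)

def build_prompts(topics, audiences, formats):
    """Return one prompt per (topic, audience, format) combination."""
    return [
        TEMPLATE.format(fmt=fmt, topic=topic, audience=audience)
        for topic, audience, fmt in product(topics, audiences, formats)
    ]

prompts = build_prompts(TOPICS, AUDIENCES, FORMATS)
print(len(prompts))   # number of distinct prompts in the grid
print(prompts[0])     # first prompt, ready to send to a generator model
```

Varying the seed lists changes the mix of topics, reading levels, and styles in the generated corpus, which is the kind of control that filtering the web alone does not give.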

[00:01:28] Speaker: So instead of just trying to filter the web, you could try to get the LLM to generate what you think the best we
